ChatGPT from OpenAI has quickly made a name for itself in the tech world. The platform-independent, user-friendly interface has enabled IT professionals and non-professionals alike to immerse themselves in the world of Large Language Models (LLMs).

According to a Bitkom survey, 74% of the 605 companies surveyed plan to invest in artificial intelligence over the next few years. Generative AI opens up new opportunities to increase revenue, reduce costs, raise productivity and manage risk more effectively. In the near future, it is likely to become a competitive advantage and a key differentiator.

In a recent Gartner[1] webinar survey of more than 2,500 executives, 38% said that customer experience and retention is the primary goal of their generative AI investments. Other goals include revenue growth, cost optimization and business continuity. For decision-makers, the message is clear: generative AI can and will be used as a key technology across many areas of the company. Applications range from IT, where code is generated automatically, to research and development, where it supports data-driven analyses. Its influence extends even to marketing, where personalized advertising and image generation are in demand.

In this article, we show how you can harness the potential of Large Language Models in customer interaction and what investments are needed to integrate this technology into your company. The question is no longer “if”, but “when”: now is the time to capitalize on the strategic advantage of generative AI.

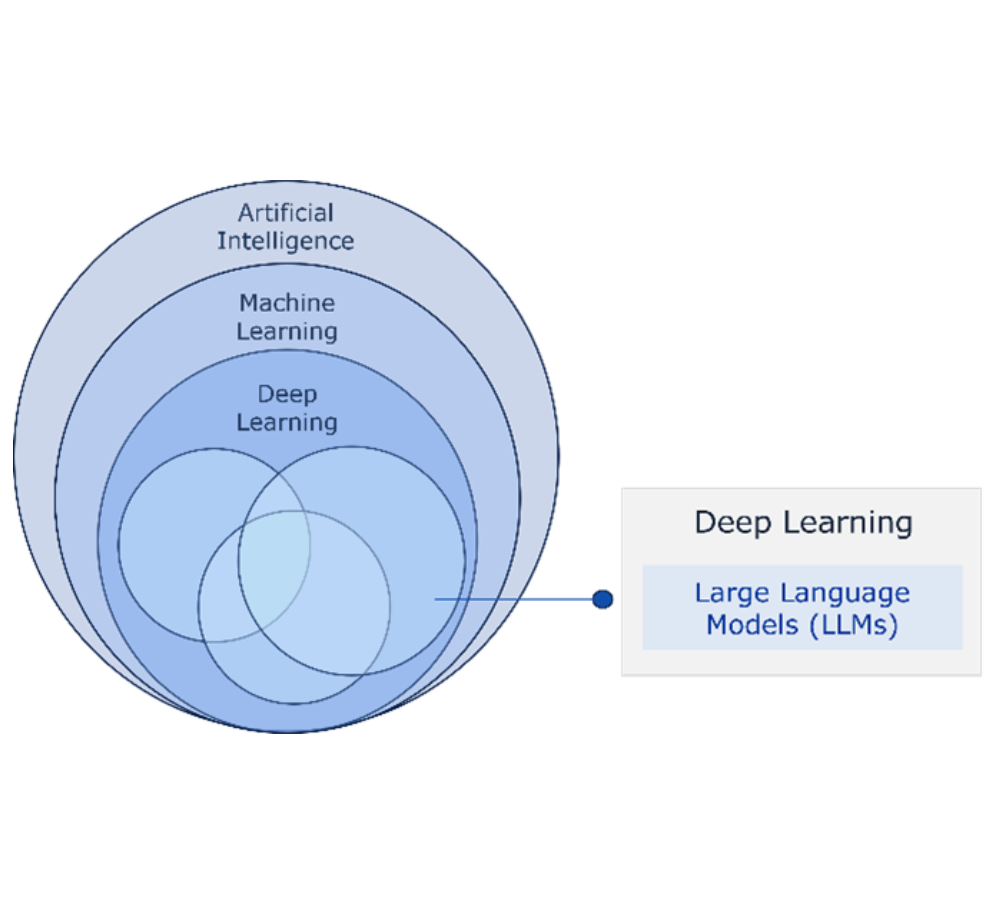

Generative AI represents the next wave in AI development. Where we once taught machines to tell a cat from a dog, generative models now allow us to create content from scratch, be it text, audio or images. In the AI landscape, Generative AI is a subset of Deep Learning, which in turn is a subset of Machine Learning. LLMs such as ChatGPT are particularly important here. These models can not only process information, but also generate their own content.

In essence, generative models such as LaMDA, LLaMA or GPT are trained on huge amounts of data and use the transformer architecture to process this information. However, these models can produce “hallucinations”: nonsensical or incorrect statements. The causes vary and include a lack of data, unclean training sets, overfitting or unclear constraints.

Collecting high-quality, diverse data is crucial, but concepts such as prompt design and grounding should be considered as well.

- Prompt design: A well-designed prompt guides the model toward high-quality answers. In other words, the way you ask the model shapes the kind of answer it gives.

- Grounding: This involves “grounding” the model in specific sources of information. Whether a fact base, document reference or knowledge base, grounding helps ensure that the AI bases its answers on reliable data.
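To make prompt design and grounding more concrete, here is a minimal Python sketch that assembles a grounded prompt. It assumes a small, hypothetical in-memory knowledge base and uses naive keyword overlap for retrieval as a stand-in for a real search index or vector database. The names `retrieve` and `build_grounded_prompt` are illustrative only, and the resulting prompt would still have to be sent to the LLM of your choice.

```python
import re

# Hypothetical in-memory knowledge base; in practice this would be a
# document store, CRM export or search index.
KNOWLEDGE_BASE = [
    "An order can be returned within 30 days of delivery.",
    "Premium customers get support by phone from 8 am to 8 pm.",
    "Invoices are sent by email after each shipment.",
]


def tokenize(text: str) -> set[str]:
    """Lower-case word set used for a naive keyword-overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str, docs: list[str]) -> str:
    """Combine prompt design (clear instructions) with grounding (sources)."""
    sources = "\n".join(
        f"[{i + 1}] {d}" for i, d in enumerate(retrieve(question, docs))
    )
    return (
        "You are a customer service assistant.\n"
        "Answer ONLY using the numbered sources below and cite them.\n"
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


print(build_grounded_prompt("How many days do I have to return my order?",
                            KNOWLEDGE_BASE))
```

The instructions in the prompt carry the prompt design, while the numbered sources carry the grounding; swapping the keyword overlap for a proper retrieval system would not change that structure.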

Depending on the available data, there are different forms of generative AI, each of which provides suitable model types for specific problems. We focus primarily on approaches that are based on natural language processing. In particular, we differentiate between the text-to-text and text-to-task model types. Hugging Face offers a comprehensive overview of various models in this area.

The “text-to-text” model type uses natural language in text form as input and also provides text as output. These models are trained to understand the mapping between two texts. Examples of applications include summarizing information, translating, extracting data, clustering content, rewriting/describing content, classifying text or generating new text.

In contrast, “text-to-task” models are designed to perform specific tasks or actions based on text input. The range of possible tasks is broad: from answering questions and carrying out search queries to making predictions. For example, a “text-to-task” model could specialize in executing specific commands in a user interface. With the help of these models, companies can implement advanced software agents, virtual assistants and far-reaching automation solutions.
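In a text-to-task setup, the model typically returns a structured description of the action to perform, which the application then validates and executes. The following Python sketch assumes a hypothetical JSON format with `task` and `args` keys and two illustrative handlers (`create_ticket`, `lookup_order`); it shows only the execution side, not the model call itself, and no specific provider API is implied.

```python
import json
from typing import Any, Callable


# Hypothetical task handlers; a real system would call a CRM,
# ticketing or UI automation API here.
def create_ticket(subject: str, priority: str = "normal") -> str:
    return f"Ticket created: {subject} (priority: {priority})"


def lookup_order(order_id: str) -> str:
    return f"Order {order_id}: shipped"  # placeholder status


# Allow-list of tasks the model is permitted to trigger.
TASKS: dict[str, Callable[..., str]] = {
    "create_ticket": create_ticket,
    "lookup_order": lookup_order,
}


def execute(model_output: str) -> str:
    """Validate the model's JSON task description and run the matching handler."""
    try:
        call: Any = json.loads(model_output)
    except json.JSONDecodeError:
        return "Error: model output is not valid JSON"
    if not isinstance(call, dict):
        return "Error: expected a JSON object"
    task = call.get("task")
    handler = TASKS.get(task) if isinstance(task, str) else None
    if handler is None:
        return f"Error: unknown task {task!r}"
    args = call.get("args", {})
    if not isinstance(args, dict):
        return "Error: args must be a JSON object"
    try:
        return handler(**args)
    except TypeError:
        return f"Error: invalid arguments for {task}"


# Assumed model output for "Where is my order 4711?" (not generated here).
print(execute('{"task": "lookup_order", "args": {"order_id": "4711"}}'))
```

Restricting execution to an explicit allow-list is a deliberate design choice: model output should be treated as untrusted input, so unknown tasks or malformed arguments are rejected rather than executed.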

When used correctly, generative AI can create significant value in customer communication. It is essential that communication channels, whether customer-facing or internal, are designed to be efficient, secure and reliable, which requires a certain level of maturity in applying AI. With sufficient data behind it, generative AI can identify trends and patterns, generate new content, automate processes and thus take customer interaction to a new level.

Software agents and virtual assistants

Think of generative AI as a kind of digital assistant that improves and streamlines your employees’ interactions with customers. A concrete example: after a customer conversation, the AI assistant automatically creates a summary and stores it in the CRM system. When contacting the customer again, employees can access these notes immediately, allowing them to respond better to the customer without wasting valuable time on research. In addition, the AI can merge data across systems, for example by linking relevant information from the company’s own knowledge database with the CRM system, deriving recommendations for action and even suggesting suitable contacts for specific topics.

McKinsey’s “Lilli” platform is an innovative practical example. This internal tool is fed with the company’s own research papers and acts as a source of knowledge for employees. When you enter a query, Lilli searches the data pool, presents the most relevant content, summarizes key points, provides direct links and even points you to experts on the topic. Such applications show the potential of combining generative AI with company data.

Implementing generative AI in a company offers great potential but also requires significant investment. The exact costs depend heavily on the individual requirements, scope and objectives.

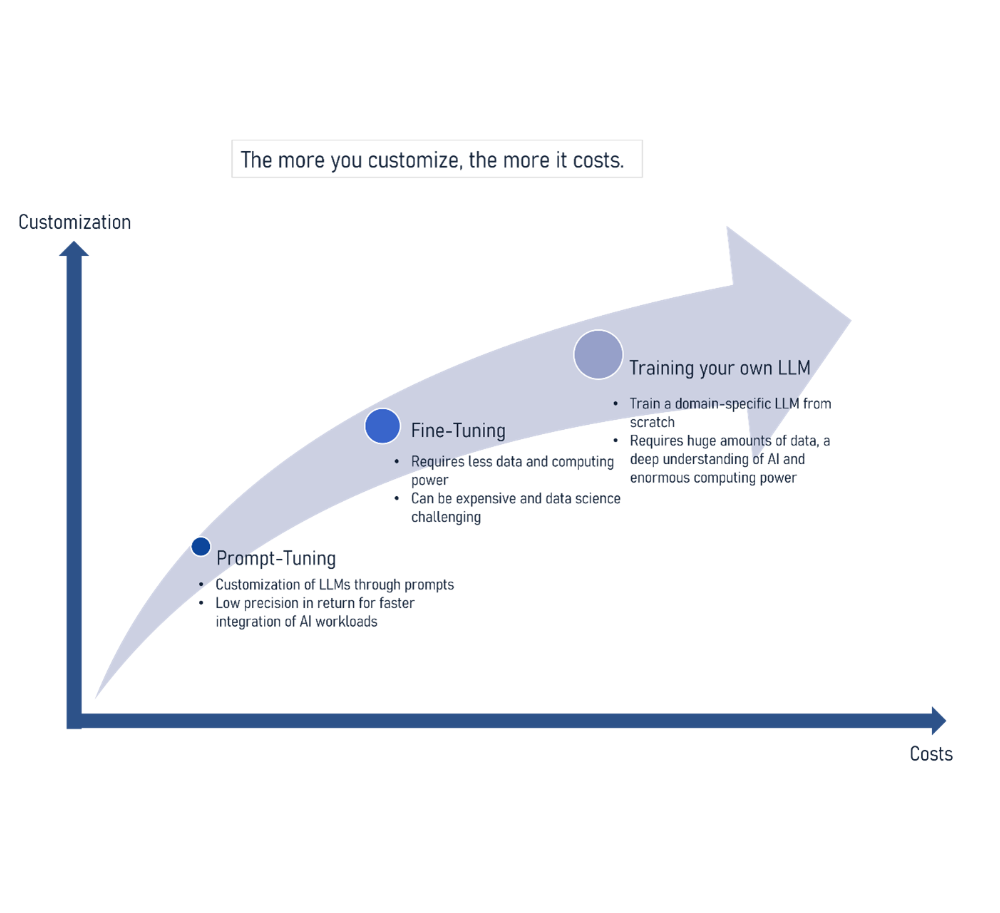

The implementation of LLMs can be done in different ways. Here are three primary approaches:

- Training LLMs from scratch (most expensive): Companies that opt for this approach develop and train their own customized models. Although this allows for a highly tailored solution, it requires enormous amounts of high-quality data as well as substantial expertise, funding and time. A well-known example is BloombergGPT, Bloomberg’s finance-specific LLM.

- Fine-tuning an existing LLM (costs vary by case): This approach adapts pre-trained models through additional training on specific data. The big advantage is efficiency: less data and computing power are required than when training a model from scratch. The process adjusts parameters of the pre-trained model so that it is better tailored to specific tasks.

- Prompt tuning of an existing LLM (low cost): The most cost-effective approach. Here, a pre-trained model is steered through targeted prompts without changing the model’s weights. Although this method is simple and inexpensive to implement, it may offer less added value in the long term, as the model is not specifically optimized for company-specific tasks.

For larger companies, or those that place particular emphasis on comprehensive data analysis, high security standards and the protection of intellectual property, customized solutions are often essential. This can mean investing in licensed, customizable models and working with specialized providers and partners. The costs here can run into the millions. Overall, costs fall into four main categories:

- Inference costs: Costs incurred each time an LLM is called to generate answers.

- Tuning costs: Costs for adapting an LLM to specific requirements.

- Pre-training costs: Investment required to train an LLM from scratch.

- Hosting costs: These are the costs of providing and maintaining an LLM, especially if it is made accessible via an API.
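Inference is often billed per token, so a rough estimate comes down to simple arithmetic. The Python sketch below uses purely hypothetical prices and volumes (not quotes from any provider) to estimate monthly inference costs for a call-summary assistant, then adds an assumed hosting fee and a one-off tuning cost spread over a year. Pre-training is left out, since it only applies to the train-from-scratch approach.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    """Hypothetical per-token prices; real prices vary by provider and model."""
    input_per_1k: float   # price per 1,000 input (prompt) tokens
    output_per_1k: float  # price per 1,000 output (generated) tokens


def monthly_inference_cost(pricing: TokenPricing, requests_per_day: int,
                           input_tokens: int, output_tokens: int,
                           days: int = 30) -> float:
    """Estimate the monthly inference cost of a pay-per-token LLM API."""
    per_request = (input_tokens / 1000 * pricing.input_per_1k
                   + output_tokens / 1000 * pricing.output_per_1k)
    return per_request * requests_per_day * days


def total_monthly_cost(inference: float, hosting: float,
                       tuning_one_off: float, amortization_months: int) -> float:
    """Running costs plus a one-off tuning cost spread over several months."""
    return inference + hosting + tuning_one_off / amortization_months


# Assumed scenario: summarizing 2,000 customer calls per day.
pricing = TokenPricing(input_per_1k=0.001, output_per_1k=0.002)
inference = monthly_inference_cost(pricing, requests_per_day=2000,
                                   input_tokens=1500, output_tokens=300)
total = total_monthly_cost(inference, hosting=500.0,
                           tuning_one_off=12000.0, amortization_months=12)
print(f"Inference: {inference:.2f}, total: {total:.2f}")
```

With these assumed figures, each request costs 0.0021, so 2,000 requests per day over 30 days come to about 126 per month in inference, which is small next to the assumed hosting and amortized tuning costs. Real ratios depend heavily on the provider, model and volume.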

Generative AI has quickly become a hotly debated topic in the technology industry. The forecasts are promising and point to enormous progress and innovation in this area. Companies that invest in generative AI early and implement it correctly are expected to gain a clear competitive advantage.

Strategic importance for companies:

Companies need to take a strategic view of generative AI in order to recognize its impact on their business model and competitiveness. A poorly timed entry can be detrimental. It is also important to consider the consequences of AI for employees.

Ethics and regulation:

AI offers advantages, but it also brings new ethical challenges in the areas of data protection and security. Companies must adhere to the principles of “Responsible Artificial Intelligence” and comply with regulations such as the EU AI Act. It is also crucial to clarify copyright and exploitation rights when implementing AI in order to protect intellectual property and avoid legal problems.

Education and awareness:

For AI to be implemented successfully, both managers and employees need basic knowledge in this area. A comprehensive understanding of how AI works, what data it requires and how it can be used safely and ethically is key.

Liongate is your partner for the successful and sustainable introduction of AI for your customer interaction:

- We advise you on the identification and elaboration of relevant use cases.

- We work with you to develop initial AI prototypes and evaluate the use of generative AI for your customer interaction.

- We build and operate innovative and secure cloud solutions for customer interaction. As an AWS and Salesforce partner, we use the most innovative platforms.

Get first answers.

Take advantage of our free analysis talk.

Get in touch with one of our advisors without obligation. In a personal conversation, you can clarify your questions, receive recommendations for sensible next steps and discuss what a roadmap for working together could look like.